RAG & Embeddings

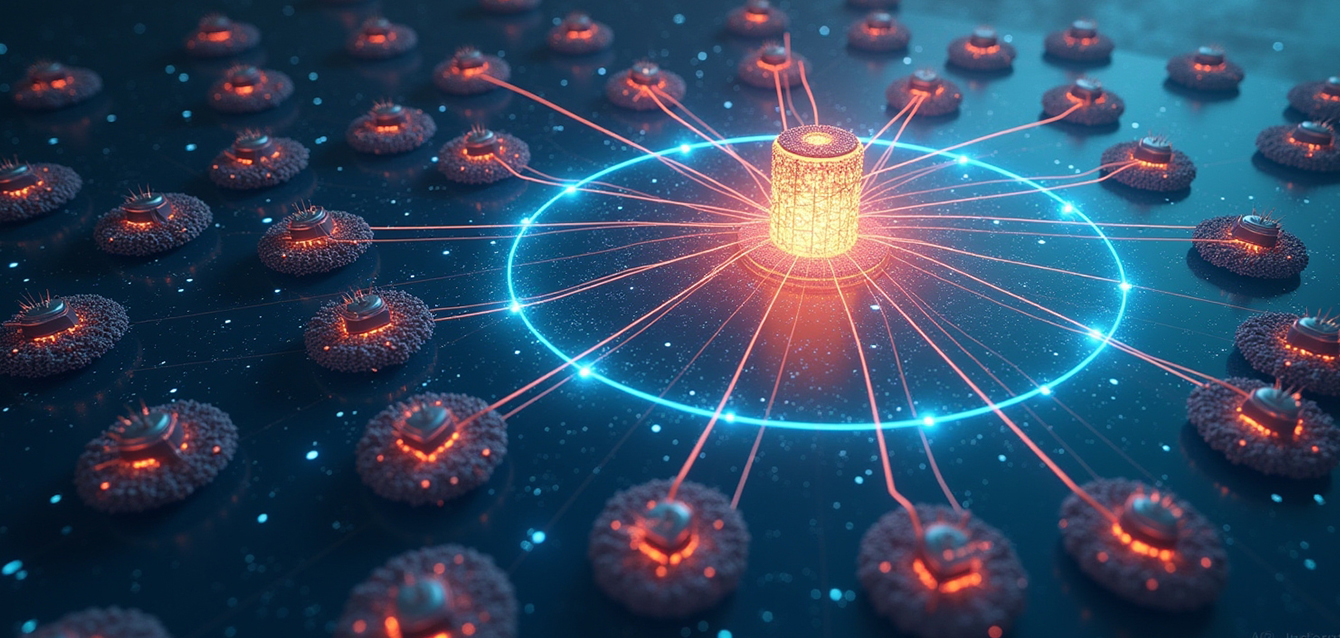

A Hybrid Architecture at the Core of Modern AI

What is RAG?

Retrieval-Augmented Generation (RAG) is an architecture that combines two major components of natural language processing:

- Retrieval: an information retrieval step that fetches relevant documents or passages from a knowledge base.

- Generation: a response generation step driven by a Large Language Model (LLM), leveraging the retrieved documents to produce contextualized answers.

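This approach addresses a key limitation of fine-tuning: instead of encoding knowledge into the model's weights, which requires retraining whenever that knowledge changes, RAG integrates external knowledge dynamically at inference time.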

The Central Role of Embeddings

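Embeddings are vector representations of textual units (sentences, paragraphs, documents) in a dense, fixed-dimensional space (typically 384 to 1536 dimensions depending on the model, and up to 3072 for some, such as OpenAI text-embedding-3-large). Texts with similar meanings are mapped to nearby vectors, which is what makes semantic retrieval possible.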

They are generated by specialized models, often distinct from LLMs (e.g., Sentence Transformers models, OpenAI text-embedding-3-small, Instructor, GTE).
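To make "fixed-dimensional vector" concrete, here is a minimal sketch that uses only the Python standard library. The function `toy_embed` is a hypothetical stand-in for a real embedding model: it hashes words into 384 buckets (a dimension chosen to match the low end of the range above) and normalizes the result, so it captures word overlap rather than meaning. A real model from the list above would replace it.

```python
import hashlib
import math
import re

DIM = 384  # assumption: the low end of the dimension range quoted above

def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Hypothetical stand-in for an embedding model: a hashed bag of words,
    L2-normalized. It only captures word overlap, not meaning."""
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        # md5 gives a stable bucket; Python's built-in hash() is salted per process
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

short = toy_embed("RAG")
long_text = toy_embed("Retrieval-Augmented Generation combines retrieval with an LLM.")
print(len(short), len(long_text))  # 384 384: the dimension does not depend on input length
```

Whatever the input length, the output has the same number of dimensions, so any two texts can be compared with a single similarity function.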

How are embeddings used in RAG?

Here are the typical processing steps in a RAG pipeline:

- Semantic Indexing (offline)
  - Reference documents are segmented into chunks (e.g., 200–500 tokens).
  - Each chunk is encoded into a vector by an embedding model.
  - The vectors are stored in a vector store, either an indexing library such as FAISS or a vector database (Vector DB) such as Weaviate, Qdrant, or Pinecone.
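The offline indexing step can be sketched as follows. This is a minimal illustration under stated assumptions: whitespace-separated words approximate tokens, `toy_embed` is a hypothetical hashed bag-of-words stand-in for an embedding model, and a plain Python list of `(chunk, vector)` pairs stands in for a vector store. The helper names `chunk_words` and `build_index` are illustrative, not part of any library.

```python
import hashlib
import math
import re

def toy_embed(text: str, dim: int = 384) -> list[float]:
    # Hypothetical hashed bag-of-words embedding; a real pipeline calls an embedding model here.
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        vec[int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec

def chunk_words(text: str, max_words: int = 300) -> list[str]:
    """Split text into consecutive chunks of at most max_words words.
    Whitespace-separated words approximate tokens (assumption)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def build_index(documents: list[str], max_words: int = 300) -> list[tuple[str, list[float]]]:
    """Offline indexing: chunk each document, embed each chunk, store (chunk, vector) pairs.
    The returned list is an in-memory stand-in for a vector store."""
    index = []
    for doc in documents:
        for chunk in chunk_words(doc, max_words):
            index.append((chunk, toy_embed(chunk)))
    return index

long_doc = " ".join(f"w{i}" for i in range(700))  # synthetic 700-word document
docs = [long_doc, "A short reference document about vector databases."]
index = build_index(docs, max_words=300)
print(len(index))  # 4: chunks of 300, 300 and 100 words, plus 1 for the short document
```

Production pipelines usually count tokens with the embedding model's own tokenizer and often add overlap between consecutive chunks; both are omitted here for brevity.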

- Semantic Search (online)
  - When a user submits a query, it is encoded into a vector with the same embedding model used for indexing.
  - Vector similarity (cosine, dot product, etc.) is computed between the query vector and the indexed chunk vectors.
  - The top-k most relevant passages are retrieved.
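The online search step can be sketched as an exact, brute-force scan: embed the query, score every stored chunk by cosine similarity, and keep the top k. As before, `toy_embed` is a hypothetical stand-in for a real embedding model, and `search` is an illustrative helper rather than the API of any vector database.

```python
import hashlib
import heapq
import math
import re

def toy_embed(text: str, dim: int = 384) -> list[float]:
    # Hypothetical hashed bag-of-words embedding standing in for a real model.
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        vec[int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def search(query: str, index: list[tuple[str, list[float]]], k: int = 3) -> list[tuple[float, str]]:
    """Embed the query with the same function used for indexing, score every chunk
    by cosine similarity, and return the k best (score, chunk) pairs."""
    q = toy_embed(query)
    scored = ((cosine(q, vec), chunk) for chunk, vec in index)
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])

corpus = [
    "FAISS is a library for efficient similarity search over dense vectors.",
    "Qdrant is a vector database with filtering support.",
    "The weather in Paris is mild in spring.",
]
index = [(c, toy_embed(c)) for c in corpus]
for score, chunk in search("vector similarity search library", index, k=2):
    print(f"{score:.3f}  {chunk}")
```

A linear scan is fine for a few thousand chunks; vector stores such as FAISS or Qdrant typically replace it with approximate nearest-neighbor indexes to scale to millions of vectors.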

- Augmented Generation
  - The retrieved passages are injected into the LLM prompt as context ("prompt stuffing", relying on in-context learning), sometimes combined with prompting techniques such as chain-of-thought.
  - The LLM generates a response based on the retrieved information, producing answers that are:
    - Contextualized
    - Grounded in the retrieved sources, which reduces (but does not eliminate) hallucinations
    - Domain- or organization-specific
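Prompt stuffing itself is plain string assembly, sketched below. The function `build_rag_prompt`, its instruction wording, and the character budget `max_chars` (a crude stand-in for the LLM's context window) are all illustrative assumptions. Sending the resulting prompt to an LLM depends on the client you use and is not shown.

```python
def build_rag_prompt(question: str, passages: list[str], max_chars: int = 4000) -> str:
    """Assemble a 'prompt stuffing' prompt: numbered retrieved passages, then the question.
    Passages are assumed to be sorted by relevance; the budget counts passage characters only."""
    context_lines = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage}"
        if used + len(entry) > max_chars:
            break  # stop before exceeding the budget, dropping the least relevant passages
        context_lines.append(entry)
        used += len(entry)
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

passages = [
    "FAISS is a library for efficient similarity search over dense vectors.",
    "Qdrant is a vector database with filtering support.",
]
prompt = build_rag_prompt("What is FAISS?", passages)
print(prompt)
```

Numbering the passages lets the model (or a later post-processing step) cite which source supports each part of the answer, and the instruction to admit missing information is one common way to discourage answers that go beyond the retrieved context.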

Typical Technology Stack for an Efficient RAG Pipeline

| Component | Possible Technologies |
| --- | --- |
| Embedding Model | OpenAI, HuggingFace (e.g., sentence-transformers), Cohere |
| Vector Store | FAISS, Qdrant, etc. |
| LLM | LLaMA 3/4, Mistral, GPT-4, Claude, etc. |
| Orchestration | LangChain, etc. |
| Cloud Stack | Azure AI Search, AWS Kendra + Bedrock, GCP Vertex AI |